Framework

The following low-level docs are aimed at core contributors.

We cover how to contribute to the core framework (aka the Core SDK).

If you are interested in building on top of the framework, such as creating assistants or adding app-level extensions, please refer to the developer docs instead.

Jan Framework

At its core, Jan is a cross-platform, local-first, AI-native framework that can be used to build anything.

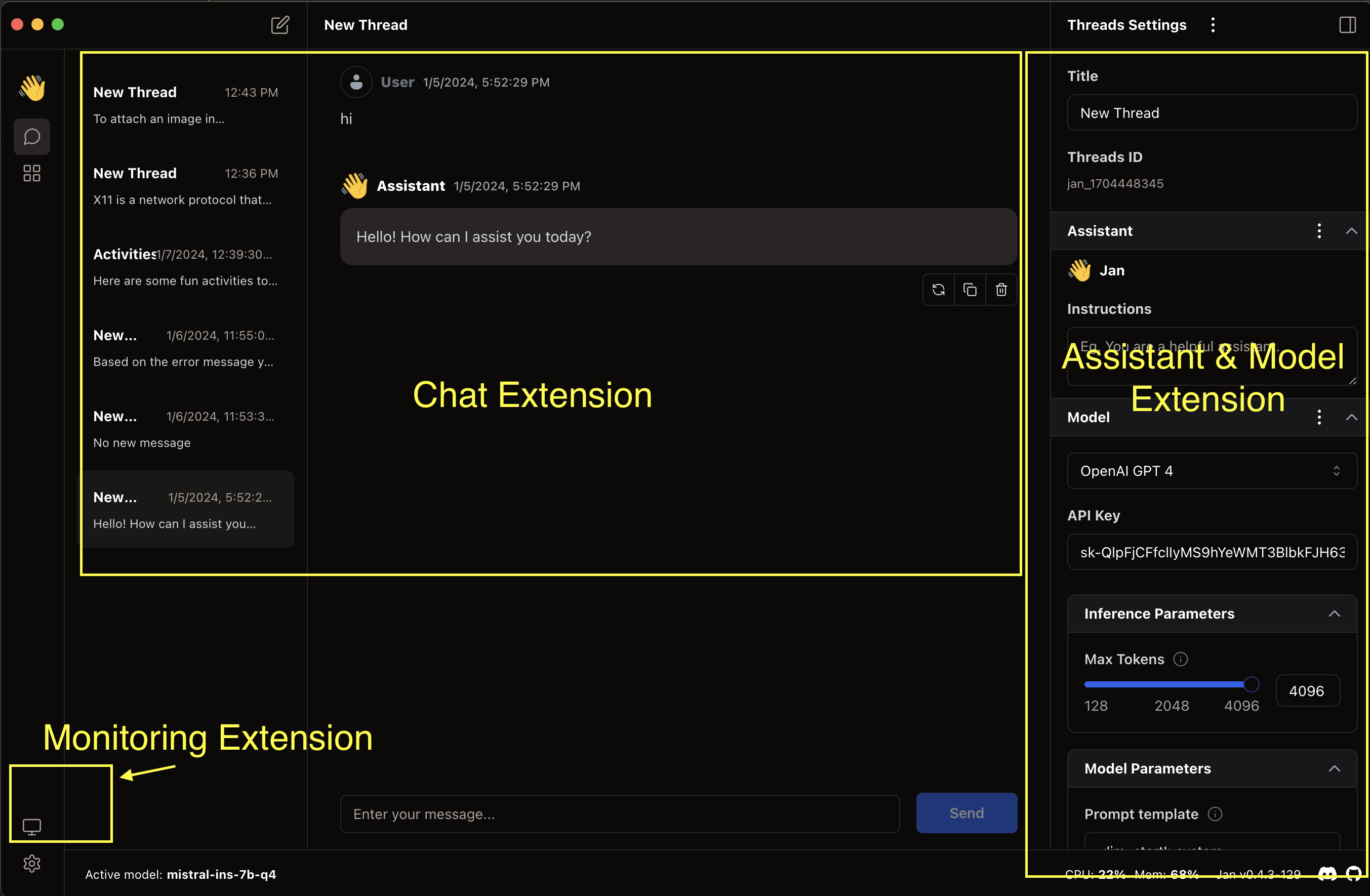

Extensions

Ultimately, we aim for a VSCode- or Obsidian-like SDK that allows devs to build and customize complex and ethical AI applications for any use case, in under 30 minutes.

In fact, the current Jan Desktop Client is just a specific set of extensions & integrations built on top of this framework.

We encourage devs to fork, customize, and open source their improvements for the greater community.
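To make the "client as a set of extensions" idea concrete, here is a minimal sketch of a host that loads and unloads extensions. The `Extension` interface, the `ExtensionHost` class, and the `onLoad`/`onUnload` hook names are hypothetical and chosen for illustration only; they are not taken from the Core SDK.

```typescript
// Hypothetical extension contract for illustration; not Jan's actual API.
interface Extension {
  name: string;
  onLoad(): void;
  onUnload(): void;
}

// A tiny host that tracks extensions by name and runs their lifecycle hooks.
class ExtensionHost {
  private readonly extensions = new Map<string, Extension>();

  // Register an extension and immediately run its load hook.
  register(extension: Extension): void {
    if (this.extensions.has(extension.name)) {
      throw new Error(`Extension already registered: ${extension.name}`);
    }
    this.extensions.set(extension.name, extension);
    extension.onLoad();
  }

  // Run the unload hook and forget the extension; returns false if unknown.
  unregister(name: string): boolean {
    const extension = this.extensions.get(name);
    if (!extension) return false;
    extension.onUnload();
    this.extensions.delete(name);
    return true;
  }

  // Names of loaded extensions, in registration order.
  loaded(): string[] {
    return Array.from(this.extensions.keys());
  }
}

// Usage: an "app" is just the set of extensions registered on the host.
const demoHost = new ExtensionHost();
demoHost.register({
  name: "hello",
  onLoad: () => console.log("hello extension loaded"),
  onUnload: () => console.log("hello extension unloaded"),
});
console.log(demoHost.loaded());
```

Under this assumed design, a fork could ship a different application by registering a different set of extensions on the same host.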

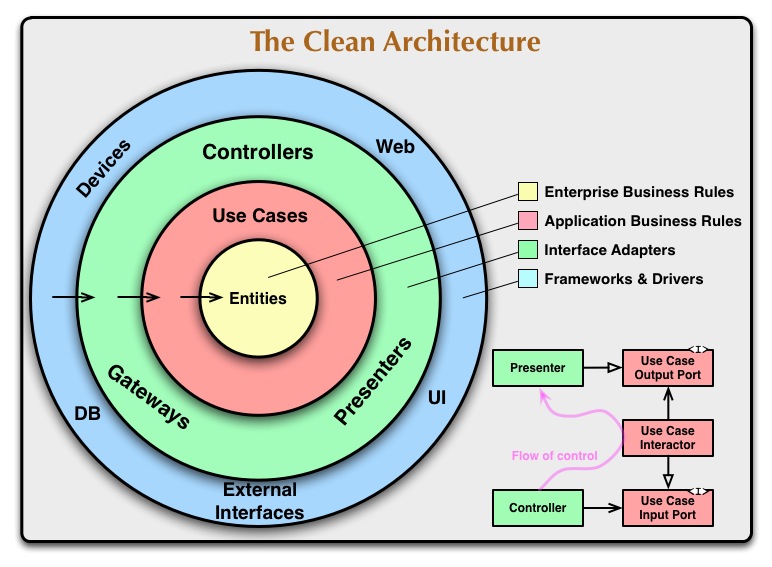

Cross Platform

Jan follows Clean Architecture to the best of our ability. Leaky abstractions remain (we're a fast-moving, open source codebase), but we do our best to build an SDK that lets devs build once and deploy everywhere.

Supported Runtimes:

- Node Native Runtime, good for server-side apps

- Electron Chromium, good for desktop native apps

- Capacitor, good for mobile apps (planned, not built yet)

- Python Runtime, good for MLOps workflows (planned, not built yet)

Supported OS & Architectures:

- Mac Intel & Silicon

- Windows

- Linux (through AppImage)

- Nvidia GPUs

- AMD ROCm (coming soon)


Local First

Jan's data persistence happens on the user's local filesystem.

We implemented opinionated abstractions on top of fs and other core modules, so that user data is saved in a folder-based structure that lets users easily package, export, and manage their data.
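As a rough illustration of what a folder-based layout on top of `fs` can look like, the sketch below stores each record as a JSON file in its own folder. The `FolderStore` class, its method names, and the `<root>/<collection>/<id>/data.json` layout are assumptions made for this example, not Jan's actual abstractions or on-disk format.

```typescript
import * as fs from "node:fs";
import * as os from "node:os";
import * as path from "node:path";

// Hypothetical helper for illustration; not part of Jan's API.
// Assumed layout: <root>/<collection>/<id>/data.json
class FolderStore {
  private readonly root: string;

  constructor(root: string) {
    this.root = root;
  }

  // Write one record as pretty-printed JSON inside its own folder.
  save(collection: string, id: string, data: unknown): string {
    const dir = path.join(this.root, collection, id);
    fs.mkdirSync(dir, { recursive: true });
    const file = path.join(dir, "data.json");
    fs.writeFileSync(file, JSON.stringify(data, null, 2), "utf8");
    return file;
  }

  // Read a record back; throws if the folder or file is missing.
  load<T>(collection: string, id: string): T {
    const file = path.join(this.root, collection, id, "data.json");
    return JSON.parse(fs.readFileSync(file, "utf8")) as T;
  }

  // List record ids (folder names) in a collection, sorted by name.
  list(collection: string): string[] {
    const dir = path.join(this.root, collection);
    if (!fs.existsSync(dir)) return [];
    return fs
      .readdirSync(dir, { withFileTypes: true })
      .filter((entry) => entry.isDirectory())
      .map((entry) => entry.name)
      .sort();
  }
}

// Usage: a throwaway root under the OS temp directory.
const demoRoot = fs.mkdtempSync(path.join(os.tmpdir(), "folder-store-"));
const demoStore = new FolderStore(demoRoot);
demoStore.save("threads", "thread-1", { title: "First chat" });
console.log(demoStore.list("threads"));
```

Because every record is a plain folder, exporting or backing up data in this sketch amounts to copying a directory, which is the property the paragraph above describes.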

Future work on this front includes cross-device syncing, multi-user experiences, and more.

Long term, we want to integrate with folks working on CRDTs (e.g. Socket Runtime) to deeply enable local-first software.


Our local-first approach currently needs a lot of work. Please don't hesitate to refactor as you make your way through the codebase.

AI Native

We believe all software applications can be natively supercharged with AI primitives and embedded AI servers.

Including:

- OpenAI-compatible AI types and core extensions that support common functionality, such as making an inference call.

- Multiple inference engines through extensions, integrations & wrappers. On this, we'd like to thank the folks at llamacpp and TensorRT-LLM, and we'll continue to make commits & fixes back upstream.
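The sketch below shows roughly what OpenAI-compatible types and an inference call can look like. The interfaces cover only a small subset of the OpenAI chat completions request and response shapes, and `/v1/chat/completions` is the standard OpenAI path. The `chatCompletion` function and the `FetchLike` type are hypothetical names introduced for this example, and the base URL is whatever OpenAI-compatible server you point it at; none of this is taken from the Core SDK.

```typescript
// Small subset of the OpenAI chat completions shapes (illustrative only).
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatCompletionRequest {
  model: string;
  messages: ChatMessage[];
  temperature?: number;
}

interface ChatCompletionResponse {
  choices: { index: number; message: ChatMessage }[];
}

// Minimal fetch-like signature so a stub can be injected (hypothetical type).
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Send one chat completion request and return the first reply's text.
async function chatCompletion(
  baseUrl: string,
  request: ChatCompletionRequest,
  fetchImpl: FetchLike,
): Promise<string> {
  const response = await fetchImpl(`${baseUrl}/v1/chat/completions`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(request),
  });
  if (!response.ok) {
    throw new Error(`Inference request failed with status ${response.status}`);
  }
  const data = (await response.json()) as ChatCompletionResponse;
  const first = data.choices[0];
  if (!first) {
    throw new Error("Response contained no choices");
  }
  return first.message.content;
}
```

In a real application you would pass the platform's `fetch` as `fetchImpl` along with the base URL of a running OpenAI-compatible server. Keeping the transport injectable is a design choice of this sketch so that the same call can target different inference engines or a test stub.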

Fun Project Ideas

Beyond the current Jan client and UX, the Core SDK can be used to build many other AI-powered and privacy-preserving applications.

- Game engine: for AI-enabled character games and procedural generation games

- Health app: for a personal healthcare app that improves habits

- Got ideas? Make a PR into this docs page!

If you are interested in tackling these issues, or have suggestions for integrations and other OSS tools we can use, please hit us up in Discord.

Our open source license is copyleft, which means forks are encouraged to stay open source, so core contributors can merge improvements upstream.